GenSim2: Scaling Robot Data Generation with Multi-modal and Reasoning LLMs

Abstract

Robotic simulation remains challenging to scale up because of the human effort required to create diverse simulation tasks and scenes. Simulation-trained policies also face scalability issues, as many sim-to-real methods focus on a single task. To address these challenges, this work proposes GenSim2, a scalable framework that leverages coding LLMs with multi-modal and reasoning capabilities to create complex and realistic simulation tasks, including long-horizon tasks with articulated objects. To automatically generate demonstration data for these tasks at scale, we propose planning and RL solvers that generalize within object categories. The pipeline can generate data for up to 100 articulated tasks with 200 objects and reduces the human effort required.

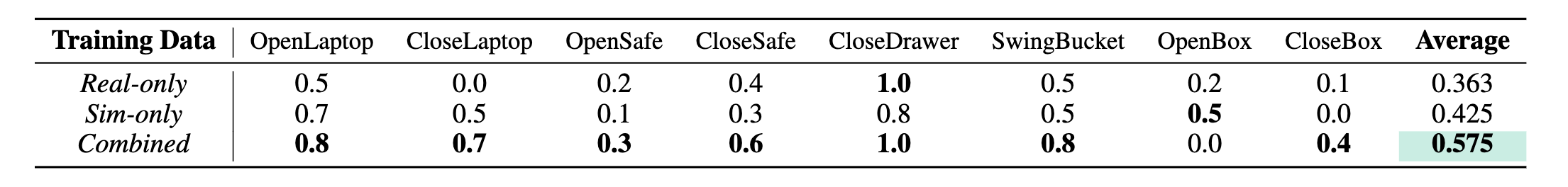

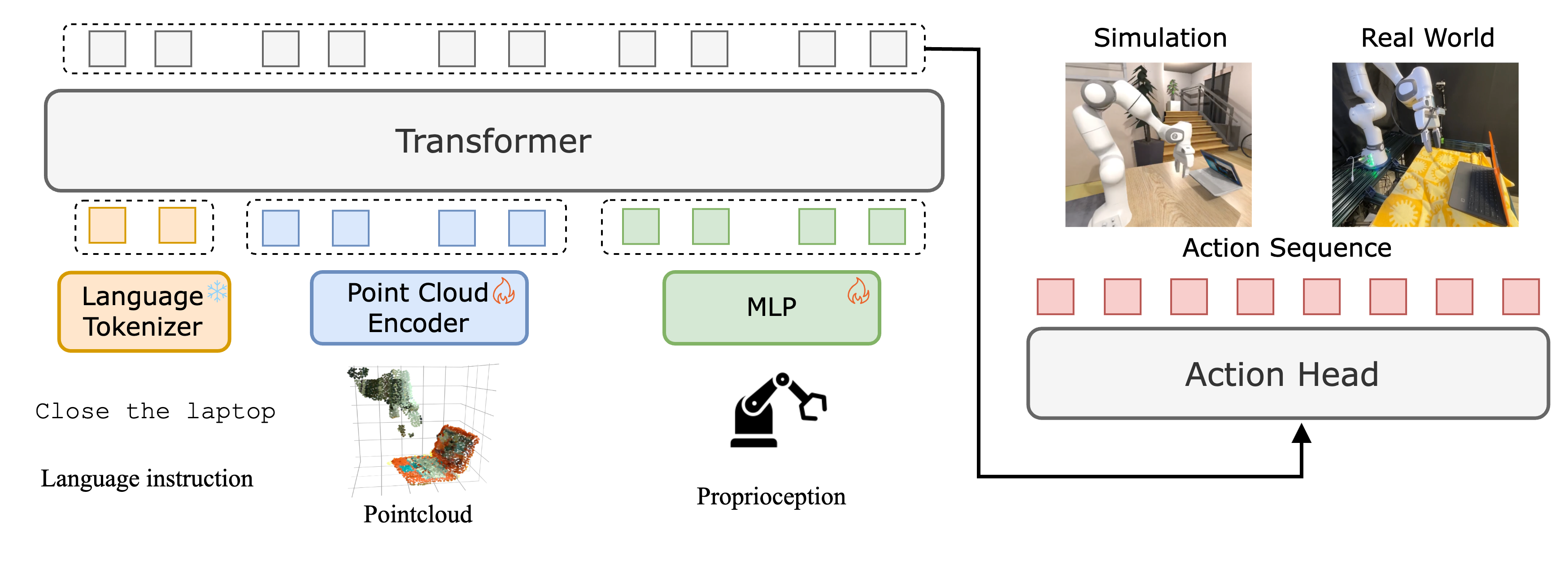

To utilize such data, we propose an effective multi-task, language-conditioned policy architecture, dubbed the proprioceptive point-cloud transformer (PPT), that learns from the generated demonstrations and exhibits strong zero-shot sim-to-real transfer. Combining the proposed pipeline and policy architecture, we show a promising use of GenSim2: the generated data can be used for zero-shot transfer or for co-training with real-world data, which improves policy performance by 20% compared with training exclusively on limited real data.

Generated Task Library

Primitive Tasks

Long-horizon Tasks

Real-Robot Experiments

Real Only

Sim+Real

Compared to using only 10 real-world trajectories, incorporating generated simulation data enhances the generalization of real-world policies across multiple tasks. Tasks shown here are executed using a multi-task policy.

GenSim2 Framework

The GenSim2 framework consists of (1) task proposal, (2) solver creation, (3) multi-task training, and (4) generalization and sim-to-real transfer.

GenSim2 Solver Generation Pipeline

The multi-modal task solver generation pipeline uses GPT-4 and optimization configurations to produce manipulation task solutions at scale.

Planner Overview

We demonstrate how the keypoint planner solves the OpenBox task. First, constraints are defined to ensure that the gripper contacts the box lid; solving them yields an actuation pose. Starting from this actuation pose, specific motions are assigned to complete the task of opening the box.
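The two stages described above (a contact constraint that fixes an actuation pose, followed by an assigned motion) can be illustrated with a small sketch. This is not the GenSim2 planner: the function names, the coordinates, the hinge location, and the choice of a hinge axis parallel to the y-axis are all hypothetical, and only gripper position is handled (orientation is ignored for brevity).

```python
import math

def solve_actuation_position(tool_keypoint_offset, lid_keypoint_world):
    """Gripper position that places the tool keypoint exactly on the lid keypoint.

    Assumption: a translation-only constraint, gripper_position + offset == lid_keypoint.
    """
    return tuple(l - t for l, t in zip(lid_keypoint_world, tool_keypoint_offset))

def rotate_in_xz_plane(point, pivot, angle):
    """Rotate a point about a hinge axis through `pivot`, assumed parallel to y.

    A positive angle lifts a point that lies on the +x side of the hinge.
    """
    dx, dz = point[0] - pivot[0], point[2] - pivot[2]
    c, s = math.cos(angle), math.sin(angle)
    return (pivot[0] + c * dx - s * dz, point[1], pivot[2] + s * dx + c * dz)

def opening_waypoints(tool_keypoint_offset, lid_keypoint_world, hinge, open_angle, steps):
    """Gripper positions that keep the tool keypoint on the lid keypoint as the lid opens."""
    waypoints = []
    for i in range(1, steps + 1):
        lid_now = rotate_in_xz_plane(lid_keypoint_world, hinge, open_angle * i / steps)
        waypoints.append(solve_actuation_position(tool_keypoint_offset, lid_now))
    return waypoints

# Hypothetical scene: the lid edge is 0.2 m from the hinge, and the fingertip
# keypoint sits 0.05 m below the gripper origin.
TOOL_OFFSET = (0.0, 0.0, -0.05)
LID_KEYPOINT = (0.2, 0.0, 0.1)
HINGE = (0.0, 0.0, 0.1)

actuation = solve_actuation_position(TOOL_OFFSET, LID_KEYPOINT)
path = opening_waypoints(TOOL_OFFSET, LID_KEYPOINT, HINGE, math.pi / 2, steps=3)
```

Here `actuation` is the pre-contact target and `path` is the sequence of gripper positions that follows the lid keypoint along its arc. A fuller planner would also constrain gripper orientation and check reachability.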

Proprioceptive Point-cloud Transformer

The proposed proprioceptive point-cloud transformer (PPT) policy architecture maps language, point-cloud, and proprioception inputs into a shared latent space for action prediction.
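To make the idea of a shared latent space concrete, the following is a minimal sketch in plain Python, not the PPT implementation. It assumes one linear "stem" per modality that projects inputs to tokens of a common width, a single learned-query attention pooling step in place of a full transformer trunk, and a linear action head. All dimensions, weights, and names are hypothetical, and the weights are random rather than trained.

```python
import math
import random

def linear(x, weight):
    """Multiply vector x (length n) by a weight matrix with n rows and d columns."""
    return [sum(x[i] * weight[i][j] for i in range(len(x))) for j in range(len(weight[0]))]

def random_matrix(rng, rows, cols):
    return [[rng.gauss(0.0, 0.1) for _ in range(cols)] for _ in range(rows)]

def softmax(scores):
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention_pool(tokens, query):
    """Single-query scaled dot-product attention over a list of tokens."""
    d = len(query)
    scores = [sum(q * t for q, t in zip(query, tok)) / math.sqrt(d) for tok in tokens]
    weights = softmax(scores)
    pooled = [sum(w * tok[j] for w, tok in zip(weights, tokens)) for j in range(d)]
    return pooled, weights

# Hypothetical sizes: 16-d text embedding, xyz points, 7 joint positions, 7-d action.
LANG_DIM, POINT_DIM, PROPRIO_DIM, LATENT_DIM, ACTION_DIM = 16, 3, 7, 8, 7
rng = random.Random(0)
stems = {
    "language": random_matrix(rng, LANG_DIM, LATENT_DIM),
    "point": random_matrix(rng, POINT_DIM, LATENT_DIM),
    "proprio": random_matrix(rng, PROPRIO_DIM, LATENT_DIM),
}
action_head = random_matrix(rng, LATENT_DIM, ACTION_DIM)
query = [rng.gauss(0.0, 1.0) for _ in range(LATENT_DIM)]

def predict_action(language_embedding, point_cloud, proprioception):
    """Project every modality to LATENT_DIM tokens, pool them, and decode an action."""
    tokens = [linear(language_embedding, stems["language"])]
    tokens += [linear(point, stems["point"]) for point in point_cloud]
    tokens.append(linear(proprioception, stems["proprio"]))
    pooled, weights = attention_pool(tokens, query)
    return linear(pooled, action_head), weights

# Placeholder inputs standing in for an encoded instruction, a 5-point cloud,
# and the current joint state.
language_embedding = [rng.uniform(-1, 1) for _ in range(LANG_DIM)]
point_cloud = [[rng.uniform(-0.5, 0.5) for _ in range(POINT_DIM)] for _ in range(5)]
proprioception = [rng.uniform(-1, 1) for _ in range(PROPRIO_DIM)]

action, attention_weights = predict_action(language_embedding, point_cloud, proprioception)
```

Because every modality is reduced to tokens of the same width before pooling, the downstream layers do not need to know which sensor a token came from; this is the design point the sketch is meant to show.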

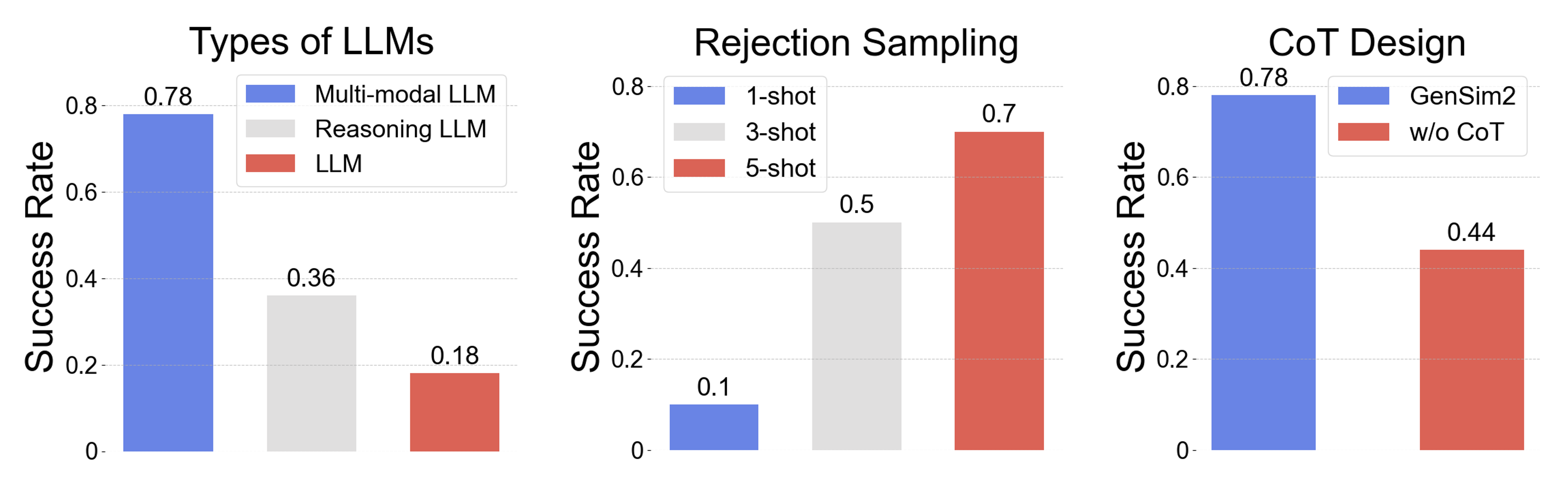

Experiments

BibTeX

@inproceedings{huagensim2,
  title={GenSim2: Scaling Robot Data Generation with Multi-modal and Reasoning LLMs},
  author={Hua, Pu and Liu, Minghuan and Macaluso, Annabella and Lin, Yunfeng and Zhang, Weinan and Xu, Huazhe and Wang, Lirui},
  booktitle={8th Annual Conference on Robot Learning}
}